There is little limit to what human beings can do, but nothing beats our ability to improve at a consistent pace. That commitment to getting better has helped the world reach some huge milestones, and technology stands out among them. We hold technology in high regard largely because of its capabilities, which guided us toward a reality nobody could have imagined otherwise. Look beyond the surface, though, and it becomes clear that this progress owed just as much to the way we applied those capabilities in real-world settings. That application gave technology a presence across the whole spectrum and set off a full-blown tech revolution. The revolution went on to scale up the human experience through some unique avenues, and even after a feat so notable, technology continues to deliver. This has become more evident in recent times, and if one new discovery has the desired impact, it will only strengthen the trend.

A research team at Purdue University has developed a device that draws inspiration from the human eye to improve computer vision. At the moment, robotic and autonomous devices rely on the familiar digital camera as the foundation of computer vision. This camera holds light-sensitive areas of crystalline silicon called photosites, which absorb photons and release electrons, converting light into an electrical signal that can be processed by increasingly sophisticated image recognition programs. A typical smartphone camera uses upwards of 10 million photosites, each only a few microns square. As a result, it can capture images at a far higher resolution than our own eyes can. However, all that data isn’t necessary for many of the tasks that use computer vision. Enter Purdue University’s latest brainchild. Named the organic electrochemical photonic synapse, the new device is relatively low resolution, which makes it well suited to sensing movement. According to reports, it currently houses 18,000 transistors on a 10-centimeter square chip, giving it a resolution of a few hundred microns. The researchers expect that the system can be refined over time to achieve a resolution of about 10 microns.
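As a rough sanity check on those figures, the spacing between sensing elements can be estimated from the chip size and transistor count. The sketch below is a back-of-the-envelope calculation, not anything taken from the researchers: it assumes one transistor per sensing element laid out on a uniform square grid, and because "10-centimeter square" can be read either as 10 cm on a side or as 10 square centimeters in area, it computes both readings. The function name `pixel_pitch_microns` is purely illustrative.

```python
import math

MICRONS_PER_CM = 10_000

def pixel_pitch_microns(chip_area_cm2: float, n_elements: int) -> float:
    """Estimate centre-to-centre spacing, assuming a uniform square grid
    with one sensing element per transistor (an assumption, not a reported spec)."""
    area_um2 = chip_area_cm2 * MICRONS_PER_CM ** 2
    return math.sqrt(area_um2 / n_elements)

N_TRANSISTORS = 18_000  # figure quoted in the article

# Reading 1: chip is 10 cm on a side (100 cm^2 of area).
pitch_side = pixel_pitch_microns(10 * 10, N_TRANSISTORS)

# Reading 2: chip has 10 cm^2 of total area.
pitch_area = pixel_pitch_microns(10, N_TRANSISTORS)

# Elements needed to reach a 10-micron pitch on a chip 10 cm on a side.
elements_at_10um = (10 * MICRONS_PER_CM // 10) ** 2

print(f"10 cm per side: ~{pitch_side:.0f} microns per element")
print(f"10 cm^2 area:   ~{pitch_area:.0f} microns per element")
print(f"elements for 10-micron pitch on a 10 cm side: {elements_at_10um:,}")
```

Under either reading the spacing comes out at hundreds of microns, in line with the reported resolution, and reaching 10 microns would require packing in several orders of magnitude more elements.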

“Our eye and brain aren’t as high resolution as silicon computing, but the way we process the data makes our eye better than most of the imaging systems we have right now when it comes to dealing with data. Computer vision systems deal with a humongous amount of data because the digital camera doesn’t differentiate between what is static and what is dynamic; it just captures everything,” said Jianguo Mei, the Richard and Judith Wien Professor of Chemistry at Purdue University’s College of Science.

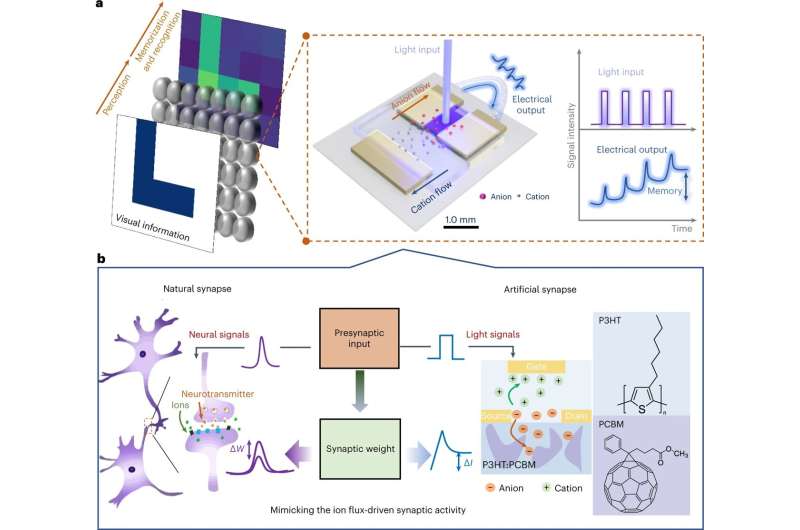

Interestingly enough, the researchers took the idea for their innovation from light perception in retinal cells. In their prototype device, light triggers an electrochemical reaction. The reaction strengthens steadily with repeated exposure to light and dissipates slowly when the light is withdrawn, creating what is effectively a memory of the light information the device received. That memory can be used to reduce the amount of data that must be processed to understand a moving scene. The result is a mechanism that is more energy efficient and error-tolerant than conventional computer vision systems.

Another detail worth mentioning is that, rather than going straight from light to an electrical signal, the new device first converts light into a flow of charged atoms called ions. This mimics the process retinal cells use to transmit light inputs to the brain, and it is made possible by a small square of light-sensitive polymer embedded in an electrolyte gel. When light hits a spot on the polymer square, it attracts positively charged ions in the gel to that spot (and repels negatively charged ions), creating a charge imbalance in the gel. Because repeated exposure to light increases the charge imbalance, the device can differentiate between the consistent light of a static scene and the dynamic light of a changing scene. Furthermore, when the light is removed, the charge imbalance persists for a short period of time, as a temporary memory of the light, before the ions gradually return to a neutral configuration.
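The build-up and slow fade described above can be pictured with a toy model. The sketch below treats a single spot on the polymer as a leaky accumulator: light pushes the charge imbalance up, and the imbalance relaxes a little at every time step. It is an illustration of the qualitative behavior only, not the researchers' model; the function name and the `charge_rate` and `decay_rate` values are assumptions chosen for readability, not measured device parameters.

```python
def simulate_spot(light, charge_rate=0.2, decay_rate=0.05):
    """Return the charge-imbalance trace for one spot on the polymer.

    light: sequence of intensities in [0, 1], one per time step.
    charge_rate, decay_rate: hypothetical constants, not device measurements.
    """
    state = 0.0  # 0 = neutral gel, 1 = fully saturated imbalance
    trace = []
    for intensity in light:
        # Light draws ions toward the spot; the build-up saturates near 1.
        state += charge_rate * intensity * (1.0 - state)
        # With or without light, the ions slowly drift back toward neutral.
        state -= decay_rate * state
        trace.append(state)
    return trace

# Three light pulses followed by 20 steps of darkness.
pulses = [1, 0, 1, 0, 1, 0] + [0] * 20
trace = simulate_spot(pulses)

for step in (0, 2, 4, 5, len(trace) - 1):
    print(f"step {step:2d}: imbalance = {trace[step]:.3f}")
```

In this toy version each successive pulse leaves the spot at a higher level than the one before, and the level is still above zero many steps after the light goes out, which is the short-lived memory the article describes.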

“Computer vision systems use a huge amount of energy, and that’s a bottleneck to using them widely. Our long-term goal is to use biomimicry to tackle the challenge of dynamic imaging with less data processing,” said Mei. “By mimicking our retina in terms of light perception, our system can be potentially much less data-intensive, though there is a long way ahead to integrate hardware with software to make it become a reality.”

To describe the process a little further, the positively charged spot serves as the gate of a transistor, allowing a small electric current to flow between a source and a drain in the presence of light. This electric current is then passed to a computer for image recognition. The output, an electric current, is the same as in a conventional camera, but it is the intermediate step of converting light into an electrochemical signal that creates the motion-sensing and memory capabilities.

Looking ahead, the researchers plan to build the technology on flexible materials. If they succeed, we can eventually expect a version that is wearable and even biocompatible.

“In a normal computer vision system, you create a signal, then you have to transfer the data from memory to processing and back to memory; it takes a lot of time and energy to do that. Our device has integrated functions of light perception, light-to-electric signal transformation, and on-site memory and data processing,” said Ke Chen, a graduate student in Mei’s lab and lead author of a Nature Photonics paper that tested the device on facial recognition.