Human beings have the means to excel in a lot of different areas, and yet what they remain absolutely best at is improving at a consistent pace. This unwavering commitment to growth, no matter the situation, has brought the world some huge milestones, with technology emerging as one of the most significant among them. The reason we hold technology in such high regard is, by and large, its skill set, which guided us towards a reality that nobody could have otherwise imagined. Nevertheless, if we look beyond the surface for a moment, it becomes abundantly clear that the whole run was also very much inspired by the way we applied those skills in real-world environments. The latter, in fact, did a lot to give the creation a spectrum-wide presence and, as a result, initiated a full-blown tech revolution. The next thing this revolution did was to scale up the human experience through some genuinely unique avenues, but even after achieving so notable a feat, technology continues to deliver the goods. This has become more and more evident in recent times, and if one new discovery has the desired impact, it will only push that trend further.

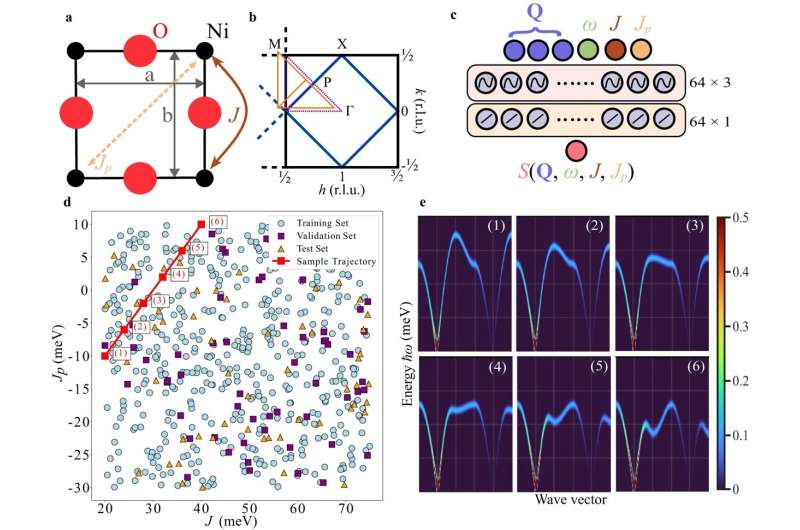

Researchers at the Department of Energy's SLAC National Accelerator Laboratory, originally named the Stanford Linear Accelerator Center, have developed a new approach to look deeper into the complex behavior of materials and, in turn, streamline the experiments happening across the board. According to reports, the team leveraged a new data-driven tool built on "neural implicit representations," a machine learning technique used in computer vision and in scientific fields such as medical imaging, particle physics, and cryo-electron microscopy. With these capabilities in play, the researchers were able to interpret coherent collective excitations, including the swinging of atomic spins within a system.

Before we dig any further, we must understand what these collective excitations do. Basically, they help scientists better understand the rules governing systems with many interacting parts, such as magnetic materials. By offering the most granular view possible, they reveal peculiar behaviors of the material at hand, including tiny changes in the patterns of atomic spins. Until recently, studying these excitations has relied on either inelastic neutron or X-ray scattering, techniques that are not only too intricate for more universal application but also demand a huge amount of resources. Fortunately, machine learning offers a much more efficient way to make sense of these measurements.

That being said, the new development stands apart here as well. Previous experiments used machine learning only to improve the accuracy with which X-ray and neutron scattering data were interpreted, leaving much of the technology's potential untapped. Furthermore, they relied on traditional image-based data representations, which the new work replaces with neural implicit representations.

As for these representations, they take coordinates, like points on a map, as inputs. For an image, this means the model predicts the color of a particular pixel purely from its position. Rather than storing the image directly, the approach learns a recipe for reconstructing it: a connection between each pixel's coordinate and its color. Because that connection is a continuous function, the model can make detailed predictions even between pixels. Thanks to this flexibility, such models have proven effective at capturing intricate details in images and scenes, a skill that can go a long way in analyzing quantum materials data.
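To make the "coordinates in, color out" idea concrete, here is a minimal sketch of a neural implicit representation in plain NumPy. It is not the SLAC team's model: the toy image, the network size (one hidden layer of 16 `tanh` units), the learning rate, and the class name `CoordinateMLP` are all illustrative assumptions. The network is trained to map each pixel's `(x, y)` coordinate to that pixel's value, after which it can be queried at a position that lies between grid points.

```python
import numpy as np

# Toy "image": a smooth pattern sampled on a 16 x 16 grid (made-up data for illustration).
SIZE = 16
grid = np.linspace(0.0, 1.0, SIZE)
xx, yy = np.meshgrid(grid, grid, indexing="ij")
image = np.sin(np.pi * xx) * np.cos(np.pi * yy)

# Each training example pairs a pixel coordinate (x, y) with that pixel's value.
coords = np.stack([xx.ravel(), yy.ravel()], axis=1)  # shape (256, 2)
values = image.reshape(-1, 1)                         # shape (256, 1)


class CoordinateMLP:
    """Hypothetical tiny neural implicit representation: f(x, y) -> pixel value."""

    def __init__(self, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 1.0, (2, hidden))
        self.b1 = rng.normal(0.0, 1.0, hidden)
        self.w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, 1))
        self.b2 = np.zeros(1)

    def forward(self, xy):
        self.h = np.tanh(xy @ self.w1 + self.b1)  # hidden features for each coordinate
        return self.h @ self.w2 + self.b2         # predicted value at each coordinate

    def train_step(self, xy, target, lr=0.05):
        """One full-batch gradient-descent step on the mean squared error."""
        err = self.forward(xy) - target
        d_out = 2.0 * err / len(xy)                        # dLoss/dPrediction
        d_pre = (d_out @ self.w2.T) * (1.0 - self.h ** 2)  # back through tanh (uses old w2)
        self.w2 -= lr * (self.h.T @ d_out)
        self.b2 -= lr * d_out.sum(axis=0)
        self.w1 -= lr * (xy.T @ d_pre)
        self.b1 -= lr * d_pre.sum(axis=0)
        return float(np.mean(err ** 2))  # loss before this update


model = CoordinateMLP()
losses = [model.train_step(coords, values) for _ in range(3000)]

# The network stores weights, not pixels, so it can be queried *between* grid points.
step = grid[1] - grid[0]
between = np.array([[grid[3] + step / 2, grid[7] + step / 2]])
print(f"training loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print("value between pixels:", model.forward(between)[0, 0])
```

The design choice worth noting is that the "image" now lives in the network weights, so resolution is no longer fixed by the grid. Practical implicit representations often add refinements such as Fourier-feature input encodings or sine activations to capture fine detail; this sketch omits them for brevity.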

“Our motivation was to understand the underlying physics of the sample we were studying. While neutron scattering can provide invaluable insights, it requires sifting through massive data sets, of which only a fraction is pertinent,” said co-author Alexander Petsch, a postdoctoral research associate at SLAC’s Linac Coherent Light Source (LCLS) and Stanford Institute for Materials and Energy Sciences (SIMES). “By simulating thousands of potential results, we constructed a machine learning model trained to discern nuanced differences in data curves that are virtually indistinguishable to the human eye.”

During initial tests, the researchers showed that their approach could analyze data continuously, in real time. This capability also let them determine when they had collected enough data to end an experiment, a step away from the old practice of relying on intuition, simulations, and post-experiment analysis to guide a study's next steps.
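The idea of stopping once "enough data" has been collected can be illustrated with a generic sequential-sampling rule. The sketch below is an assumption for illustration only, not the criterion used in the study: it keeps taking readings from a hypothetical `measure` callable, updates a running mean with Welford's algorithm, and stops as soon as the standard error of that mean falls below a chosen `tolerance`. The simulated readings (true value 3.0, noise 0.5) are made up.

```python
import math
import random


def run_until_confident(measure, tolerance, min_samples=10, max_samples=10_000):
    """Take readings until the standard error of their mean falls below `tolerance`.

    `measure` is a hypothetical zero-argument callable that returns one noisy reading.
    Returns (estimate, standard_error, samples_used, converged).
    """
    min_samples = max(min_samples, 2)  # at least two readings are needed for a variance
    n, mean, m2 = 0, 0.0, 0.0          # Welford's running mean and sum of squared deviations
    stderr = float("inf")
    while n < max_samples:
        x = measure()
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        if n >= 2:
            stderr = math.sqrt(m2 / (n - 1) / n)  # sample standard deviation / sqrt(n)
        if n >= min_samples and stderr < tolerance:
            return mean, stderr, n, True  # enough data: end the "experiment" early
    return mean, stderr, n, False  # budget exhausted before reaching the tolerance


# Usage with simulated readings, purely for illustration.
rng = random.Random(42)
estimate, err, n_used, converged = run_until_confident(
    lambda: rng.gauss(3.0, 0.5), tolerance=0.05
)
print(f"stopped after {n_used} readings: {estimate:.3f} +/- {err:.3f} (converged={converged})")
```

With a noise level of 0.5 and a tolerance of 0.05, the rule needs on the order of a hundred readings, since the standard error shrinks roughly as 0.5 divided by the square root of the sample count. The `min_samples` floor guards against stopping on a spuriously small variance estimate from the first few readings.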

“Machine learning and artificial intelligence are influencing many different areas of science,” said Sathya Chitturi, a Ph.D. student at Stanford University and co-author on the study. “Applying new cutting-edge machine learning methods to physics research can enable us to make faster advancements and streamline experiments. It’s exciting to consider what we can tackle next based on these foundations. It opens up many new potential avenues of research.”