Introduction

Applying machine learning to pricing processes is becoming easier due to advances in technology and increasingly accessible platforms. While complex algorithms seem to be at odds with the transparency and judgment needed in actuarial and pricing practices, these concepts can be highly complementary if machine learning is implemented correctly. In this paper, we will explore the necessity and possibilities of machine learning applications in the insurance industry, and provide guidelines for incorporating machine learning techniques into pricing applications.

Limited Data and the Intended Purpose of Machine Learning

One common pushback to employing modeling or machine learning techniques is the lack of sufficient data. This viewpoint is often based on antiquated assumptions about machine learning algorithms and the intended purpose of their output. First, a traditional way of handling thin data is to apply credibility weighting so that the analysis does not overreact to noise. Machine learning techniques such as penalized regression behave consistently with standard credibility models: the penalty shrinks estimates toward a base value, and segments with less data are shrunk more. As a result, these techniques limit the influence of noise from thin data and can be used on datasets that were previously out of scope because of their small size. Second, the intended purpose of a machine learning analysis can be expanded from producing final implementation recommendations to providing teams with the best data-driven starting point for further analysis. This shift allows machine learning techniques to be applied to everyday work. Finally, because these credibility-based machine learning techniques are increasingly easy to apply, pricing teams can adopt them without significant investment. The use of machine learning algorithms can begin while a team is working with small datasets and evolve as the team and company grow.
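
To make the credibility connection concrete, here is a minimal Python sketch for the simplest possible case: a single segment whose adjustment is shrunk toward a base of zero. It assumes squared-error loss and an L2 (ridge) penalty with strength `lam`; the function names and data are hypothetical. In this setting the ridge estimate is algebraically identical to a credibility-weighted estimate with credibility constant `k = lam` and a complement of zero, so a segment with few observations is pulled further toward the base than a segment with many.

```python
import math


def ridge_adjustment(observations, lam):
    """L2-penalised (ridge) estimate of one segment's adjustment to a base of 0.

    Minimises sum_i (y_i - b)**2 + lam * b**2; setting the derivative to zero
    gives b = sum(y) / (n + lam).
    """
    return sum(observations) / (len(observations) + lam)


def credibility_adjustment(observations, k, complement=0.0):
    """Credibility-weighted estimate Z * mean + (1 - Z) * complement, Z = n / (n + k)."""
    n = len(observations)
    z = n / (n + k)
    return z * (sum(observations) / n) + (1 - z) * complement


# Hypothetical observed deviations from the base rate for two segments.
thin_segment = [0.40, -0.10, 0.30]         # 3 observations
thick_segment = [0.40, -0.10, 0.30] * 100  # same mean, 300 observations

for name, ys in [("thin", thin_segment), ("thick", thick_segment)]:
    ridge = ridge_adjustment(ys, lam=5.0)
    cred = credibility_adjustment(ys, k=5.0)
    assert math.isclose(ridge, cred)  # identical estimates when k == lam
    print(f"{name}: raw mean={sum(ys) / len(ys):.3f}, shrunk={ridge:.3f}")
```

Both segments have the same raw mean, but the thin segment's estimate is shrunk much closer to the base, which is the behavior a credibility procedure is designed to produce.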

For small datasets, machine learning algorithms may be used to identify the starting point for continued pricing analysis. Machine learning can determine the characteristics, directionality, and approximate magnitude of the changes required to correct for poor experience in a rating plan. The algorithm will provide a shortcut by quickly identifying potentially hidden problem areas without hours of dashboard analysis.
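
A minimal sketch of this kind of shortcut, assuming each segment's actual losses, expected losses under the current rating plan, and claim count are available: each segment's actual-to-expected deviation is credibility-weighted, and segments are ranked by the size of the result. The segment names, figures, and credibility constant `K` are hypothetical, and a full analysis would use a penalized model across all rating variables rather than this one-way view.

```python
# Hypothetical segment data: (actual losses, expected losses under the current
# plan, claim count). Segment names and values are illustrative only.
SEGMENTS = {
    ("urban", "under_25"): (180_000, 120_000, 60),
    ("rural", "under_25"): (30_000, 15_000, 4),
    ("urban", "25_plus"): (500_000, 510_000, 400),
    ("rural", "25_plus"): (90_000, 100_000, 80),
}
K = 50  # hypothetical credibility constant: Z = n / (n + K)


def credible_deviation(actual, expected, claims, k=K):
    """Credibility-weighted deviation of the actual-to-expected ratio from 1.0."""
    z = claims / (claims + k)
    return z * (actual / expected - 1.0)


def rank_problem_areas(segments, k=K):
    """Sort segments by the absolute size of their credibility-weighted deviation."""
    scored = {seg: credible_deviation(*vals, k=k) for seg, vals in segments.items()}
    return sorted(scored.items(), key=lambda item: abs(item[1]), reverse=True)


for segment, deviation in rank_problem_areas(SEGMENTS):
    direction = "increase" if deviation > 0 else "decrease"
    print(f"{segment}: {direction} of roughly {abs(deviation):.1%}")
```

The output gives the characteristics (segment), directionality (increase or decrease), and approximate magnitude of each indicated change; the rural under-25 segment has the highest raw ratio but only four claims, so it is heavily discounted.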

As lines of business grow, analysis of medium-sized datasets can also evolve. By using the existing rating plan as a starting point, machine learning techniques can be applied effectively to datasets that would previously have been out of scope. While the data may still not be credible enough to build a rating plan from scratch, it is likely sufficient to fully support the direction and magnitude of the proposed changes.
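
One common way to use an existing rating plan as a starting point is to enter its predictions as an offset, so the model estimates only adjustments to the plan. The sketch below assumes a Poisson claim-count model for a single segment with a ridge penalty `lam` on the log-adjustment, solved with Newton's method; the function name and numbers are hypothetical. With `lam = 0` the adjustment equals the raw actual-to-expected ratio, and larger penalties keep the result closer to the current plan.

```python
import math


def penalized_log_adjustment(claims, expected, lam, tol=1e-12, max_iter=100):
    """Ridge-penalised Poisson log-adjustment to an existing rating plan.

    `expected` is the claim count predicted by the current plan (the offset),
    so only beta is estimated. Maximises
        claims * beta - expected * exp(beta) - lam * beta**2 / 2,
    which is strictly concave. Requires claims > 0 or lam > 0.
    """
    beta = 0.0  # start from "no change to the current plan"
    for _ in range(max_iter):
        grad = claims - expected * math.exp(beta) - lam * beta
        hess = -expected * math.exp(beta) - lam
        step = grad / hess
        beta -= step  # Newton update
        if abs(step) < tol:
            break
    return beta


claims, expected = 30, 20.0  # hypothetical segment with actual/expected = 1.5
for lam in (0.0, 10.0, 100.0):
    beta = penalized_log_adjustment(claims, expected, lam)
    print(f"lambda={lam:>5}: multiplicative adjustment {math.exp(beta):.3f}")
```

The penalty acts as the credibility control: the data only move the existing plan as far as their volume supports.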

Finally, with large datasets, machine learning will fully support the creation of a new rating plan and can be used for advanced analysis. This is typically the step that people jump to when considering the application of machine learning techniques, but it is not the starting point!

Machine learning has too much potential for pricing departments not to take advantage of it in every applicable analysis. Even on small datasets, analysts will benefit from starting with a purely data-driven machine learning model that immediately identifies the signals in the data and informs further analysis.

Using Machine Learning for an Efficient Frontier

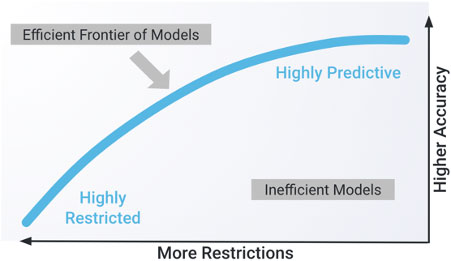

As an extension of this exploration of machine learning models, we should also reconsider our view of a model’s output. It is often assumed that the output of a model must be a single point estimate, but this is not necessarily true! Machine learning techniques can be run under different levels of constraint, with each level producing a different outcome. When business decisions need to be applied to a model, it may be best for the machine learning technique to produce an efficient frontier of outputs based on various assumptions. To do this, teams can take advantage of the automation provided by machine learning and run a series of models with assumptions ranging from most to least restrictive from a business perspective. The result will be a chart similar to the one above.
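
As a minimal sketch of such a series, assume each segment has residuals relative to the current plan and is fit with a lasso (L1) penalty, which has a closed form when each segment has its own indicator. Sweeping the penalty `lam` from large to small moves from the most restrictive model (no segments changed) to the least restrictive (every segment adjusted), and each model contributes one point to the frontier: number of segments adjusted versus squared fitting error. The data, names, and penalty grid are hypothetical; a real frontier could use other business constraints on its axes.

```python
import math

# Hypothetical residuals (observed minus current-plan prediction) by segment.
RESIDUALS = {
    "A": [0.30, 0.25, 0.35, 0.28],
    "B": [0.05, -0.02, 0.04],
    "C": [-0.20, -0.15],
    "D": [0.01, 0.00, -0.01, 0.02, 0.00],
}


def lasso_adjustments(residuals, lam):
    """Closed-form lasso with one indicator per segment (orthogonal design).

    For each segment, minimises sum_i (y_i - b)**2 + lam * abs(b); the solution
    soft-thresholds the segment's residual sum and divides by its size.
    """
    adjustments = {}
    for seg, ys in residuals.items():
        total = sum(ys)
        shrunk = max(abs(total) - lam / 2.0, 0.0)
        adjustments[seg] = math.copysign(shrunk, total) / len(ys)
    return adjustments


def sse(residuals, adjustments):
    """Squared error remaining after applying the segment adjustments."""
    return sum((y - adjustments[seg]) ** 2 for seg, ys in residuals.items() for y in ys)


def efficient_frontier(residuals, lambdas):
    """One (lambda, segments adjusted, fit error) point per model."""
    points = []
    for lam in sorted(lambdas, reverse=True):  # most to least restrictive
        adj = lasso_adjustments(residuals, lam)
        n_changed = sum(1 for b in adj.values() if b != 0)
        points.append((lam, n_changed, sse(residuals, adj)))
    return points


for lam, n_changed, err in efficient_frontier(RESIDUALS, [0.0, 0.1, 0.5, 1.0, 3.0]):
    print(f"lambda={lam:<4} segments adjusted={n_changed} SSE={err:.4f}")
```

Each row is one candidate model. Choosing among them, trading the number of segments that change against the improvement in fit, is where business judgment enters.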

Business decisions are rarely black and white. Usually, there is a range of reasonable actions that can be implemented to address a problem, and the “best” solution is a matter of opinion. Similarly, the efficient frontier approach provides teams with the opportunity to identify a “range of reasonable models.” Within this range, actuarial and business judgment can be applied to determine the best course of action. A point estimate does not allow for this judgment to be easily applied.

The Changing Actuarial Experience

Moving from a manual process to a machine learning modeling process will change the working experience for an actuary. Often, this results in a perceived – but not actual – loss of control due to automation. Separating purely data-driven decisions from business decisions is key when adopting a machine learning approach.

For example, deciding where to cap a characteristic such as policyholder age because of limited credibility is often a tedious, judgmental process. However, the optimal placement of this cap is a purely data-driven decision that should be automated to remove human bias from the process. After seeing the data-driven result, a user can still override the selection if business considerations require it. This is not a lack of control – but rather a more informed starting point to exercise control.
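
A minimal sketch of automating the cap placement, assuming the data are summarized as exposure and observed mean (for example, claim frequency) by age, and assuming a simple selection rule: merge all ages at or above the cap into one level and choose the cap that minimizes exposure-weighted squared error plus a `penalty` per rating level. The rule, names, data, and penalty value are hypothetical stand-ins for whatever criterion a team actually uses; the final lines show where a business override would apply.

```python
# Hypothetical data: age -> (exposure, observed claim frequency).
AGES = {
    70: (1000, 0.10),
    71: (900, 0.11),
    72: (700, 0.12),
    73: (400, 0.15),
    74: (150, 0.14),
    75: (60, 0.16),
    76: (20, 0.09),
}


def grouped_sse(ages, cap):
    """Exposure-weighted squared error from merging every age >= cap into one level.

    Ages below the cap keep their own level, so only the merged group adds error.
    """
    top = [(exposure, mean) for age, (exposure, mean) in ages.items() if age >= cap]
    total_exposure = sum(exposure for exposure, _ in top)
    group_mean = sum(exposure * mean for exposure, mean in top) / total_exposure
    return sum(exposure * (mean - group_mean) ** 2 for exposure, mean in top)


def choose_cap(ages, penalty):
    """Data-driven cap: minimise grouped error plus a penalty per rating level."""
    def cost(cap):
        levels = sum(1 for age in ages if age < cap) + 1
        return grouped_sse(ages, cap) + penalty * levels
    return min(sorted(ages), key=cost)


data_driven_cap = choose_cap(AGES, penalty=0.05)
business_override = None  # e.g. 75, if business considerations require it
selected_cap = business_override if business_override is not None else data_driven_cap
print(f"data-driven cap: {data_driven_cap}, selected cap: {selected_cap}")
```

The data-driven selection is computed the same way every time; the override is an explicit, visible decision layered on top of it.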

The majority of the day-to-day actuarial experience will shift from manually performing data analysis to interpreting the output of automated data analysis. This does not mean that actuarial teams will require less technical knowledge: to fully understand the assumptions behind these machine learning algorithms, an actuary will still need sufficient technical skills. Removing this busywork simply allows an actuarial team to apply their technical skills in more meaningful and interesting ways.

The Necessity of Transparent Algorithms for Actuarial Analysis

This paper has discussed the necessity of interpreting automated decisions and creating an efficient frontier of actions to evaluate, but so far has neglected to mention their prerequisite, which is transparent machine learning. When selecting between similar models, a user needs the ability to determine which model is the most appropriate for implementation given business considerations.

This differentiation is quite difficult with black box models, as it may not immediately be clear what is happening within the black box to cause the models to produce different scores for similar risks. When using black box models, an actuary must also consider the possibility of hidden behaviors that are unintuitive at best and dangerous at worst. Within a black box model, it is nearly impossible to prove that unintuitive behavior is not happening. An actuary would need to perform enough testing to show that the likelihood or effect of unintuitive behavior is immaterial or at an acceptable level of risk. This is a time-consuming and necessarily imperfect process that can be avoided with transparent algorithms.
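
The testing burden can be illustrated with a simple sampled monotonicity check. The sketch below assumes a black-box `score` function that is expected to be non-decreasing in a given feature; it bumps that feature for each sampled record and reports records whose score falls. The black-box function, feature names, and hidden edge case are hypothetical. Because the dip affects only a small slice of records, a random sample can easily miss it, so a clean result is evidence rather than proof.

```python
import random


def monotonicity_violations(score, records, feature, step):
    """Return the records whose score decreases when `feature` is increased by `step`."""
    violations = []
    for record in records:
        bumped = dict(record)
        bumped[feature] = record[feature] + step
        if score(bumped) < score(record):
            violations.append(record)
    return violations


def black_box(record):
    """Hypothetical opaque score: rises with prior claims, except for a hidden
    dip for drivers under 21 with exactly 3 prior claims."""
    score = 100 + 20 * record["prior_claims"]
    if record["age"] < 21 and record["prior_claims"] == 3:
        score -= 50
    return score


random.seed(0)
sample = [
    {"age": random.randint(18, 80), "prior_claims": random.randint(0, 5)}
    for _ in range(200)
]
found = monotonicity_violations(black_box, sample, "prior_claims", 1)
print(f"{len(found)} violation(s) in {len(sample)} sampled records")
```

With a transparent model, the same question is answered by inspecting the fitted effect of `prior_claims` directly rather than by sampling.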

Due to the less interpretable nature of black box methodologies, the likelihood of discovering unintuitive behavior late in the work cycle increases. Black box methodologies can also make explanations to internal and external stakeholders difficult, and their questions about how an insured’s score was calculated may have no intuitive or helpful answer. Transparent machine learning algorithms allow an actuary to readily assess the actuarial soundness of a model and greatly speed up the internal and external approval process.

Equity, Fairness, Bias, and Transparent Algorithms

Machine learning cannot be discussed without also acknowledging the topic of algorithmic bias. How to consistently avoid disparate impact while setting equitable and competitive rates is a current discussion and research topic in the US insurance and regulatory environment, and this paper will not propose a specific methodology to address it. Transparent machine learning technology will also not miraculously solve this problem, but it will allow rates and models to be comprehensively analyzed and their reasoning fully understood. For each rating variable, the exact impact is understood and easily measured. “Why did this happen?” is an easy question to answer with transparent models.
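
As a minimal sketch of why this question is easy to answer, consider a hypothetical multiplicative rating plan of the kind produced by GLM- or GAM-style models: a base rate times one relativity per rating variable. The base rate, variables, and relativities here are illustrative, not a real plan. Because the structure is multiplicative, the premium difference between two risks decomposes exactly into one ratio per variable.

```python
import math

BASE_RATE = 500.0  # hypothetical base premium
RELATIVITIES = {   # hypothetical factor tables
    "territory": {"urban": 1.20, "rural": 0.90},
    "vehicle_age": {"0-3": 1.10, "4-9": 1.00, "10+": 0.85},
    "prior_claims": {"0": 0.95, "1": 1.15, "2+": 1.40},
}


def premium(risk):
    """Base rate times the relativity for each rating variable's level."""
    result = BASE_RATE
    for variable, level in risk.items():
        result *= RELATIVITIES[variable][level]
    return result


def explain_difference(risk_a, risk_b):
    """Per-variable premium ratio between two risks with the same variables.

    Because the plan is multiplicative, the product of these ratios equals
    premium(risk_a) / premium(risk_b), up to floating-point rounding.
    """
    return {
        variable: RELATIVITIES[variable][risk_a[variable]]
        / RELATIVITIES[variable][risk_b[variable]]
        for variable in risk_a
    }


a = {"territory": "urban", "vehicle_age": "0-3", "prior_claims": "1"}
b = {"territory": "rural", "vehicle_age": "0-3", "prior_claims": "0"}
ratios = explain_difference(a, b)
for variable, ratio in ratios.items():
    print(f"{variable}: x{ratio:.3f}")
assert math.isclose(math.prod(ratios.values()), premium(a) / premium(b))
```

Every part of the premium gap is attributed to a named variable, which is what makes the effect of each variable auditable by an actuary or regulator.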

On the other hand, black box machine learning techniques make the already difficult problem of equity and bias even harder to solve by obscuring the calculation and justification of rates. There are increasingly sophisticated ways to examine the outputs of a black box, but in the end, a modeler needs to trust that they have explored everything. What happens when an unanticipated edge case appears in practice? How can we be sure that the black box is unbiased both in aggregate and at every individual level? With transparent models like penalized GAMs, questions about model behavior are easy to answer. With a GBM, on the other hand, the explanation of a rate calculation is opaque.

While it is uncertain how the industry will move forward to address issues of equity and disparate impact, transparent AI clearly has an advantage over black box methodologies in the ability to be explained and validated. Transparent machine learning algorithms will still be as biased as the data going into them – but this bias is not hidden by black box methodology. The effect of all variables will be visible to the actuary and regulator and can therefore be properly addressed. Transparent machine learning algorithms are necessary for insurance companies and regulatory bodies that want to address possible biases in insurance models.

Conclusion

Machine learning is quickly entering the insurance space, and actuaries can benefit greatly from its adoption in pricing processes. Expanding the use and purpose of machine learning analysis will open exciting new opportunities. Adopting an easy-to-use platform for machine learning will be key in allowing actuaries to use machine learning to its fullest potential. An analyst will not frequently use machine learning techniques if they need to set up a codebase from scratch for every application and maintain the codebase across multiple platform updates. Machine learning algorithms should be as accessible to actuaries as spreadsheets.

The proper application of machine learning will meaningfully change a team’s work experience, but it will not dilute the importance of industry knowledge. Machine learning algorithms will quickly provide data-driven insights so users can apply their industry knowledge to the results and drive company strategy. Machine learning is no longer just a forward-looking buzzword – it is now ready to be broadly applied to pricing analysis.

About Akur8

Akur8 is revolutionizing non-life insurance pricing with Transparent Machine Learning, boosting insurers’ pricing capabilities with unprecedented speed and accuracy across the pricing process without compromising on auditability or control.

Our modular pricing platform automates technical and commercial premium modeling. It empowers insurers to compute adjusted and accurate rates in line with their business strategy while materially impacting their business and maintaining absolute control of the models created, as required by state regulators. With Akur8, time spent modeling is reduced by 10x, the models’ predictive power is increased by 10% and loss ratio improvement potential is boosted by 2-4%.

Akur8 already serves 100+ customers across 40+ countries, including P&C global carriers AXA, Generali, Munich Re, Europ Assistance, Tokio Marine and MS&AD; commercial P&C insurers TMNAS, FCCI, NEXT, HDVI and Canal; personal and commercial P&C insurers Cypress, Madison Mutual and Western Reserve Group; and specialty P&C insurers Canopius and Bass Underwriters. Over 900 actuaries use Akur8 daily to build their pricing models across all lines of business. Akur8’s strategic partnerships include Milliman, Guidewire, Duck Creek and Sapiens.